mirror of

https://github.com/terribleplan/next.js.git

synced 2024-01-19 02:48:18 +00:00

sitemap.xml and robots.txt example (#4163)

This example app shows you how to set up sitemap.xml and robots.txt files for proper indexing by search engine bots.


parent a806c16713
commit 3f6834dfec

42

examples/with-sitemap-and-robots-express-server/.eslintrc.js

Normal file

@ -0,0 +1,42 @@
module.exports = {
  parser: "babel-eslint",
  extends: "airbnb",
  env: {
    browser: true,
    jest: true
  },
  plugins: ["react", "jsx-a11y", "import"],
  rules: {
    "max-len": ["error", 100],
    semi: ["error", "never"],
    quotes: ["error", "single"],
    "comma-dangle": ["error", "never"],
    "space-before-function-paren": ["error", "always"],
    "no-underscore-dangle": ["error", { allow: ["_id"] }],
    "prefer-destructuring": [
      "error",
      {
        VariableDeclarator: {
          array: false,
          object: true
        },
        AssignmentExpression: {
          array: true,
          object: false
        }
      },
      {
        enforceForRenamedProperties: false
      }
    ],
    "import/prefer-default-export": "off",
    "jsx-a11y/anchor-is-valid": "off",
    "react/react-in-jsx-scope": "off",
    "react/jsx-filename-extension": [
      "error",
      {
        extensions: [".js"]
      }
    ]
  }
};

16

examples/with-sitemap-and-robots-express-server/.gitignore

vendored

Executable file

@ -0,0 +1,16 @@
*~
*.swp
tmp/
npm-debug.log
.DS_Store


.build/*
.next
.vscode/
node_modules/
.coverage
.env
.next

yarn-error.log

10

examples/with-sitemap-and-robots-express-server/.npmignore

Normal file

@ -0,0 +1,10 @@
.build/
.next/
.vscode/
node_modules/

README.md

.env
.eslintrc.js
.gitignore

64

examples/with-sitemap-and-robots-express-server/README.md

Normal file

@ -0,0 +1,64 @@
[Deploy to now](https://deploy.now.sh/?repo=https://github.com/zeit/next.js/tree/master/examples/with-sitemap-and-robots-express-server)

|

||||

|

||||

# Example with sitemap.xml and robots.txt using Express server

|

||||

|

||||

## How to use

|

||||

|

||||

### Using `create-next-app`

|

||||

|

||||

Execute [`create-next-app`](https://github.com/segmentio/create-next-app) with [Yarn](https://yarnpkg.com/lang/en/docs/cli/create/) or [npx](https://github.com/zkat/npx#readme) to bootstrap the example:

|

||||

|

||||

```bash

|

||||

npx create-next-app --example with-sitemap-and-robots-express-server with-sitemap-and-robots-express-server-app

|

||||

# or

|

||||

yarn create next-app --example with-sitemap-and-robots-express-server with-sitemap-and-robots-express-server-app

|

||||

```

|

||||

|

||||

### Download manually

|

||||

|

||||

Download the example [or clone the repo](https://github.com/zeit/next.js):

|

||||

|

||||

```bash

|

||||

curl https://codeload.github.com/zeit/next.js/tar.gz/canary | tar -xz --strip=2 next.js-canary/examples/with-sitemap-and-robots-expres-server

|

||||

cd with-sitemap-and-robots-express-server

|

||||

```

|

||||

|

||||

Install it and run:

|

||||

|

||||

```bash

|

||||

npm install

|

||||

npm run dev

|

||||

# or

|

||||

yarn

|

||||

yarn dev

|

||||

```

|

||||

|

||||

Deploy it to the cloud with [now](https://zeit.co/now) ([download](https://zeit.co/download))

|

||||

|

||||

```bash

|

||||

now

|

||||

```

|

||||

|

||||

## The idea behind the example

|

||||

|

||||

This example app shows you how to set up sitemap.xml and robots.txt files for proper indexing by search engine bots.

|

||||

|

||||

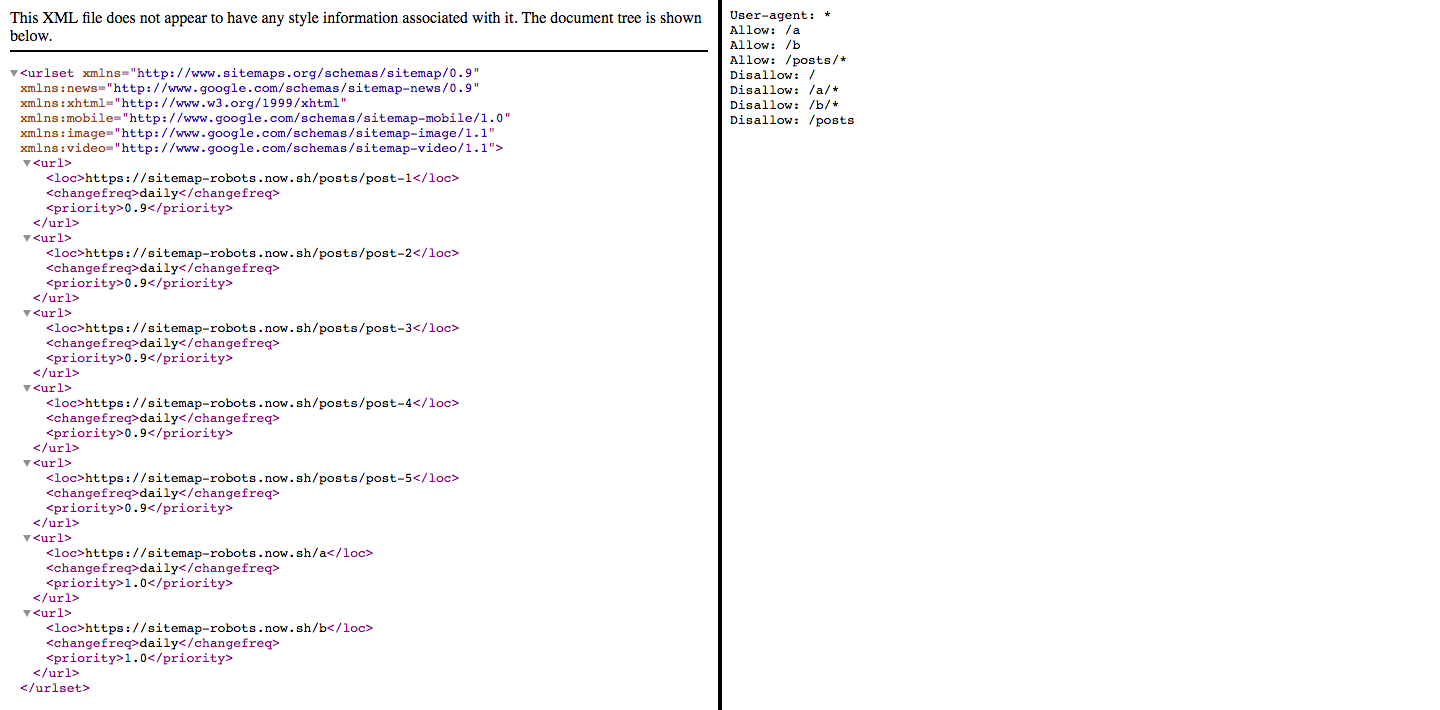

The app is deployed at: https://sitemap-robots.now.sh. Open the page and click the links to see sitemap.xml and robots.txt. Here is a snapshot of these files, with sitemap.xml on the left and robots.txt on the right:

|

||||

|

||||

|

||||

Notes:

|

||||

- routes `/a` and `/b` are added to sitemap manually

|

||||

- routes that start with `/posts` are added automatically to sitemap; in a real application, you will get post slugs from a database
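
The notes above say that, in a real application, the `/posts` entries would come from a database. Below is a minimal sketch of that step. It assumes a hypothetical `buildSitemapEntries` helper, which is not part of this example. The helper takes a list of post slugs (from `posts()` here, or from a database query you supply) and returns entries in the `{ url, changefreq, priority }` shape that `server/sitemapAndRobots.js` passes to `sitemap.add`.

```javascript
// Hypothetical helper, not part of this example. It mirrors the entries that
// server/sitemapAndRobots.js adds, but takes the post slugs as input so they
// can come from any data source (for example, a database query).
const STATIC_ROUTES = ['/a', '/b']

function buildSitemapEntries (postSlugs) {
  // Manually listed routes get the highest priority, as in sitemapAndRobots.js
  const staticEntries = STATIC_ROUTES.map(url => ({ url, changefreq: 'daily', priority: 1 }))

  // One entry per post slug under /posts
  const postEntries = postSlugs.map(slug => ({
    url: `/posts/${slug}`,
    changefreq: 'daily',
    priority: 0.9
  }))

  return staticEntries.concat(postEntries)
}
```

With the example's data, `buildSitemapEntries(posts().map(post => post.slug)).forEach(entry => sitemap.add(entry))` would add the same seven entries as the loop in `setup`, in a different order. Because `setup` runs only once at startup, posts created afterwards would not appear until the server restarts. Rebuilding the entries periodically is one way around that.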

When you start this example locally:
- your app will run at http://localhost:8000
- sitemap.xml will be located at http://localhost:8000/sitemap.xml
- robots.txt will be located at http://localhost:8000/robots.txt

If you want to deploy this example, replace the URL in the following locations with your own domain:
- `hostname` in `server/sitemapAndRobots.js`
- `ROOT_URL` in `server/app.js`
- `Sitemap` at the bottom of `static/robots.txt`
- `alias` in `now.json`
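
When the same domain is edited by hand in several files, the copies can drift out of sync. One alternative is sketched below under stated assumptions. The public origin is read from an environment variable, and robots.txt is generated at request time, so its `Sitemap` line always matches that origin. The `ROOT_URL` environment variable and the `robotsTxt` helper are hypothetical and not part of this example. The directives themselves are copied from `static/robots.txt`.

```javascript
// Hypothetical: take the public origin from an environment variable,
// falling back to the local development URL used in server/app.js.
const ROOT_URL = process.env.ROOT_URL || 'http://localhost:8000'

// Build robots.txt with the same directives as static/robots.txt,
// deriving the Sitemap URL from the given origin.
function robotsTxt (rootUrl) {
  const lines = [
    'User-agent: *',
    'Allow: /a',
    'Allow: /b',
    'Allow: /posts/*',
    'Disallow: /',
    'Disallow: /a/*',
    'Disallow: /b/*',
    'Disallow: /posts',
    '',
    `Sitemap: ${new URL('/sitemap.xml', rootUrl).href}`
  ]
  return `${lines.join('\n')}\n`
}
```

With this approach, the `/robots.txt` route in `server/sitemapAndRobots.js` could call `res.type('text/plain').send(robotsTxt(ROOT_URL))` instead of `res.sendFile(...)`, and `hostname: ROOT_URL` could be passed to `sm.createSitemap`. The `alias` in `now.json` is static configuration, so it would still need to be edited by hand.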

Deploy with `now`, or with `yarn now` if you specified `alias` in `now.json`.

12

examples/with-sitemap-and-robots-express-server/now.json

Normal file

@ -0,0 +1,12 @@
{
  "env": {
    "NODE_ENV": "production"
  },
  "scale": {
    "sfo1": {
      "min": 1,
      "max": 1
    }
  },
  "alias": "https://sitemap-robots.now.sh"
}

26

examples/with-sitemap-and-robots-express-server/package.json

Normal file

@ -0,0 +1,26 @@
{
  "name": "with-sitemap-and-robots-express-server",
  "version": "1.0.0",
  "license": "MIT",
  "scripts": {
    "build": "next build",
    "start": "node server/app.js",
    "dev": "nodemon server/app.js --watch server",
    "now": "now && now alias"
  },
  "dependencies": {
    "express": "^4.16.2",
    "next": "latest",
    "react": "^16.2.0",
    "react-dom": "^16.2.0",
    "sitemap": "^1.13.0"
  },
  "devDependencies": {
    "eslint": "^4.15.0",
    "eslint-config-airbnb": "^16.1.0",
    "eslint-plugin-import": "^2.8.0",
    "eslint-plugin-jsx-a11y": "^6.0.3",
    "eslint-plugin-react": "^7.5.1",
    "nodemon": "^1.14.11"
  }
}

@ -0,0 +1,25 @@
/* eslint-disable */
import React from 'react'
import Head from 'next/head'

function Index () {
  return (
    <div style={{ padding: '10px 45px' }}>
      <Head>
        <title>Index page</title>
        <meta name='description' content='description for indexing bots' />
      </Head>
      <p>
        <a href='/sitemap.xml' target='_blank'>
          Sitemap
        </a>
        <br />
        <a href='/robots.txt' target='_blank'>
          Robots
        </a>
      </p>
    </div>
  )
}

export default Index

@ -0,0 +1,28 @@
const express = require('express')
const next = require('next')
const sitemapAndRobots = require('./sitemapAndRobots')

const dev = process.env.NODE_ENV !== 'production'

const port = process.env.PORT || 8000
const ROOT_URL = dev
  ? `http://localhost:${port}`
  : 'https://sitemap-robots.now.sh'

const app = next({ dev })
const handle = app.getRequestHandler()

// Prepare the Next.js app, then set up and start the Express server
app.prepare().then(() => {
  const server = express()

  sitemapAndRobots({ server })

  server.get('*', (req, res) => handle(req, res))

  // Start the Express server
  server.listen(port, (err) => {
    if (err) throw err
    console.log(`> Ready on ${ROOT_URL}`) // eslint-disable-line no-console
  })
})

@ -0,0 +1,11 @@
const posts = () => {
  const arrayOfPosts = []
  const n = 5

  for (let i = 1; i < n + 1; i += 1) {
    arrayOfPosts.push({ name: `Post ${i}`, slug: `post-${i}` })
  }
  return arrayOfPosts
}

module.exports = posts

@ -0,0 +1,50 @@
const sm = require('sitemap')
const path = require('path')
const posts = require('./posts')

const sitemap = sm.createSitemap({
  hostname: 'https://sitemap-robots.now.sh',
  cacheTime: 600000 // 600 sec - cache purge period
})

const setup = ({ server }) => {
  const Posts = posts()
  for (let i = 0; i < Posts.length; i += 1) {
    const post = Posts[i]
    sitemap.add({
      url: `/posts/${post.slug}`,
      changefreq: 'daily',
      priority: 0.9
    })
  }

  sitemap.add({
    url: '/a',
    changefreq: 'daily',
    priority: 1
  })

  sitemap.add({
    url: '/b',
    changefreq: 'daily',
    priority: 1
  })

  server.get('/sitemap.xml', (req, res) => {
    sitemap.toXML((err, xml) => {
      if (err) {
        res.status(500).end()
        return
      }

      res.header('Content-Type', 'application/xml')
      res.send(xml)
    })
  })

  server.get('/robots.txt', (req, res) => {
    res.sendFile(path.join(__dirname, '../static', 'robots.txt'))
  })
}

module.exports = setup
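
The document served at `/sitemap.xml` is produced by `sitemap.toXML()` from the `sitemap` package. To show roughly what that document looks like, here is a minimal hand-rolled serializer for the sitemaps.org 0.9 `<urlset>` format. It is an illustrative stand-in, not the package's implementation. `escapeXml` and `toSitemapXml` are hypothetical names, and the whitespace and number formatting may differ from what the package emits.

```javascript
// Escape the five XML special characters so URLs are safe inside <loc>.
const escapeXml = value => String(value)
  .replace(/&/g, '&amp;')
  .replace(/</g, '&lt;')
  .replace(/>/g, '&gt;')
  .replace(/"/g, '&quot;')
  .replace(/'/g, '&apos;')

// Serialize { url, changefreq, priority } entries into a sitemaps.org 0.9 urlset.
// Relative URLs are resolved against hostname, similar to the role of
// `hostname` in sm.createSitemap above.
function toSitemapXml (hostname, entries) {
  const urlElements = entries.map(({ url, changefreq, priority }) => [
    '  <url>',
    `    <loc>${escapeXml(new URL(url, hostname).href)}</loc>`,
    changefreq ? `    <changefreq>${changefreq}</changefreq>` : null,
    priority !== undefined ? `    <priority>${Number(priority).toFixed(1)}</priority>` : null,
    '  </url>'
  ].filter(Boolean).join('\n'))

  return [
    '<?xml version="1.0" encoding="UTF-8"?>',
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">',
    ...urlElements,
    '</urlset>'
  ].join('\n')
}
```

For example, `toSitemapXml('https://sitemap-robots.now.sh', [{ url: '/a', changefreq: 'daily', priority: 1 }])` returns a urlset with a single `<url>` element whose `<loc>` is `https://sitemap-robots.now.sh/a`.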

@ -0,0 +1,10 @@
User-agent: *
Allow: /a
Allow: /b
Allow: /posts/*
Disallow: /
Disallow: /a/*
Disallow: /b/*
Disallow: /posts

Sitemap: https://sitemap-robots.now.sh/sitemap.xml
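
The rules in this file overlap. For example, `/a` is matched by both `Allow: /a` and `Disallow: /`. Crawlers that implement RFC 9309 (the Robots Exclusion Protocol), such as Googlebot, resolve such conflicts by applying the most specific (longest) matching rule, with `Allow` winning a tie. The sketch below approximates that precedence for the rules above. `isAllowed` and `patternToRegExp` are hypothetical helpers for checking the file, not code from this example, and they ignore `User-agent` grouping and percent-encoding.

```javascript
// Rules copied from static/robots.txt above (User-agent: *).
const RULES = [
  { allow: true, path: '/a' },
  { allow: true, path: '/b' },
  { allow: true, path: '/posts/*' },
  { allow: false, path: '/' },
  { allow: false, path: '/a/*' },
  { allow: false, path: '/b/*' },
  { allow: false, path: '/posts' }
]

// Turn a robots.txt path pattern into a RegExp anchored at the start:
// '*' matches any character sequence and a trailing '$' anchors the end.
function patternToRegExp (pattern) {
  const anchored = pattern.endsWith('$')
  const body = anchored ? pattern.slice(0, -1) : pattern
  const source = body
    .split('*')
    .map(part => part.replace(/[.*+?^${}()|[\]\\]/g, '\\$&'))
    .join('.*')
  return new RegExp(`^${source}${anchored ? '$' : ''}`)
}

// The longest matching pattern wins; on equal length, Allow wins.
// A path that matches no rule is allowed.
function isAllowed (urlPath, rules = RULES) {
  let best = null
  rules.forEach((rule) => {
    if (!patternToRegExp(rule.path).test(urlPath)) return
    const longer = best === null || rule.path.length > best.path.length
    const allowTie = best !== null && rule.path.length === best.path.length && rule.allow
    if (longer || allowTie) best = rule
  })
  return best === null || best.allow
}
```

Under these rules, `isAllowed('/about')` also returns `true`, because `Allow: /a` is a prefix match for every path that begins with `/a`. If only the exact `/a` page should be crawlable, `Allow: /a$` would express that.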
